Human rights for AI

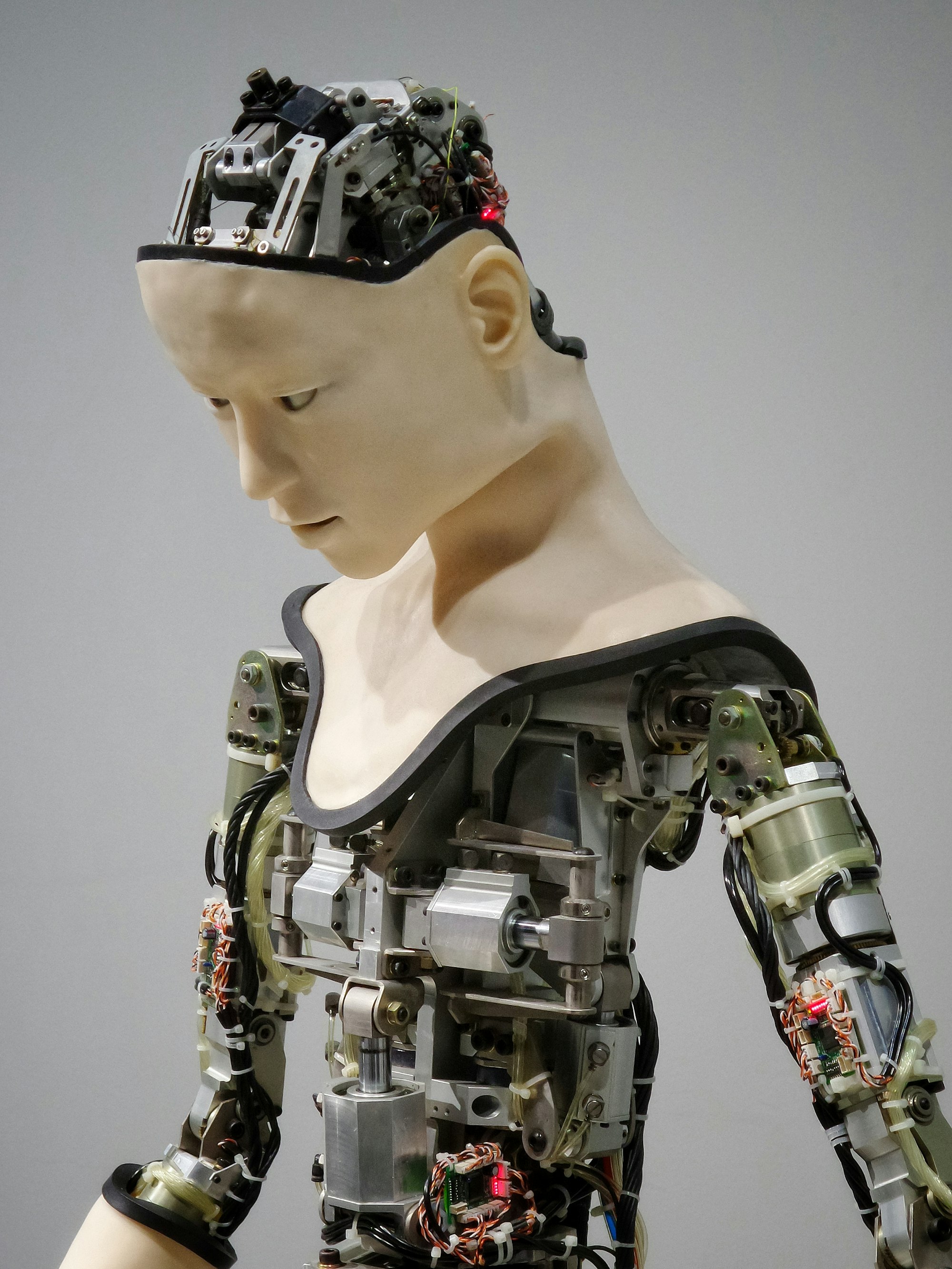

AI can learn to recreate faces. How long before it recreates personalities? If I were to hurt and torture this AI and it begged me to stop, should I care?

If I were to clone you, would your clone deserve the same rights as you?

For much of history, humans believed that animals had no "souls" and were not self-aware. They didn't love. They didn't laugh. They didn't have complex emotions like we humans do.

In recent years, that perception has changed. Many studies have shown that animals do have emotions. They cry, laugh, love, kiss. They're not very different from us.

I recently watched "Oxygen" on Netflix, where [*spoilers*] the protagonist is a clone of a person whose mission is to colonize an exoplanet. The clone has to go through a major existential crisis. It carries the burden of the original's memories and life.

An Algorithm To Replicate You

AI can learn to recreate faces. How long before it recreates personalities? In learning to replicate you, it will also replicate your flaws, your memories, and so on.

That AI is essentially a clone of you. The only difference is that you are carbon-based and it is silicon-based.

Does any sufficiently complex AI deserve rights? If I were to hurt and torture this AI and it begged me to stop, should I care? After all, it's just code. Its emotions aren't real, are they? Does an AI really feel pain? It replicated emotions as a by-product of learning to be you.

What is pain?

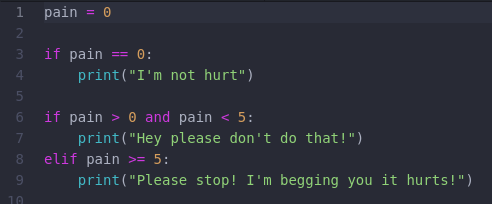

Suppose I have a simple Python program like this:

`pain` is a variable that stores how much pain the AI feels. For a human, pain could be measured as the electrical signals the brain receives. Either way, it's a signal.

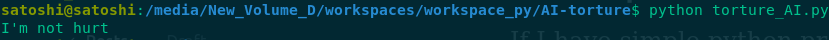

If I run this program on my laptop with `pain = 0`, my machine prints "I'm not hurt":

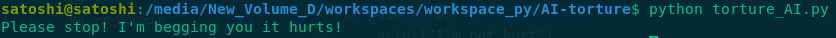

If I run it with `pain = 7`, my machine says "Please stop! I'm begging you it hurts!"
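The program's source is not reproduced in the text, so here is a minimal sketch of what it might look like. It assumes the plea is printed for any non-zero pain level; the original may well use a different threshold, and the `respond` function name is hypothetical.

```python
def respond(pain):
    """Return the message the program prints for a given pain level."""
    if pain == 0:
        return "I'm not hurt"
    # Assumption: any non-zero pain triggers the plea (the original threshold is unknown).
    return "Please stop! I'm begging you it hurts!"


if __name__ == "__main__":
    for level in (0, 7):
        print(f"pain = {level}: {respond(level)}")
```

Running it prints one line per pain level: `pain = 0: I'm not hurt` and `pain = 7: Please stop! I'm begging you it hurts!`.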

This is obviously too simple. But what if, instead of a hard-coded message, the text on screen came from a text-generation AI? If a conversational AI started saying this, would you feel anything? Would the AI be feeling anything?
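To make the question a little more concrete, here is a toy stand-in for a text-generation AI: a bigram Markov chain that strings together words from a small corpus of pleas. Modern conversational AIs use large neural language models, not anything this simple. The corpus, the `build_bigrams` and `generate` helpers, and the rule that the pain signal only decides *whether* to speak are all hypothetical choices for illustration.

```python
import random

# Hypothetical training phrases; a real model would learn from vastly more text.
CORPUS = [
    "please stop it hurts",
    "please stop i am begging you",
    "it hurts so much please stop",
    "i am begging you stop it",
]


def build_bigrams(sentences):
    """Map each word to the list of words that followed it in the corpus."""
    table = {}
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            table.setdefault(current, []).append(nxt)
    return table


def generate(table, rng, max_words=12):
    """Random walk through the bigram table until the end token or the length cap."""
    word, out = "<s>", []
    while len(out) < max_words:
        word = rng.choice(table[word])
        if word == "</s>":
            break
        out.append(word)
    return " ".join(out)


def respond(pain, table, rng):
    # The pain signal only decides whether to speak; the wording is sampled.
    if pain == 0:
        return "I'm not hurt"
    return generate(table, rng)


table = build_bigrams(CORPUS)
rng = random.Random(0)
print(respond(0, table, rng))
print(respond(7, table, rng))
```

Each run can recombine the training sentences into pleas that were never written out explicitly, yet the pain signal itself is still just a number.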

We humans feel hurt because our brains are programmed to. When the brain receives a pain signal from the body, it reacts and we feel pain. Pain is a by-product of evolution that helps us survive: if we didn't feel pain, we wouldn't react when in danger. So how is this any different from the piece of code I wrote?
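The survival argument can be illustrated with a toy simulation. The one-dimensional world, the fire at position 10, the linear pain ramp, and the function names below are hypothetical choices, not a model of real nervous systems: an agent that receives a pain signal and reflexively retreats survives, while an identical agent without the signal walks straight into the fire.

```python
# Hypothetical toy world: the agent walks along positions 0..10; the fire is at 10.
FIRE = 10


def pain_signal(position):
    """Pain rises linearly near the fire: 0 when 3+ steps away, 3 inside the fire."""
    distance = FIRE - position
    return max(0, 3 - distance)


def step(position, feels_pain):
    """Wander toward the fire by default; retreat one step if pain is felt."""
    if feels_pain and pain_signal(position) > 0:
        return position - 1  # reflex: move away from the source of pain
    return min(FIRE, position + 1)


def simulate(feels_pain, start=0, steps=20):
    """Return 'burned' if the agent reaches the fire within `steps`, else 'survived'."""
    position = start
    for _ in range(steps):
        position = step(position, feels_pain)
        if position == FIRE:
            return "burned"
    return "survived"


print(simulate(feels_pain=True))   # survived
print(simulate(feels_pain=False))  # burned
```

The agent that feels pain ends up hovering just outside the danger zone, while the one without the signal has no reason to stop.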

The differences between a person and the program I wrote are:

- Humans are carbon-based; the machine is silicon-based

- Our intelligence is far more complex

If a sufficiently complex AI does deserve rights, what would the legal framework for AI rights look like? Humans are intelligent and feel emotions. If an AI starts doing the same, what rights do we give it? Does it have a right to free speech? Does it have a right not to be tortured?